“Zero-ETL” isn’t a promise that transformation disappears; it’s a shift in where the heavy lifting happens. Instead of building and babysitting brittle copy jobs, the platform continuously reflects source changes into your analytics lake—so teams spend more time modeling for the business and less time fighting pipelines.

What Mirroring actually is (and isn’t)

Microsoft Fabric Mirroring continuously replicates databases and selected tables from operational or external systems into OneLake, giving you a low-latency, read-optimized copy in Delta/Parquet that every Fabric persona can consume. It’s a managed, SaaS-style replication—no custom CDC plumbing, no queue farms, no “landing zones” to stitch.

The “zero-ETL” claim is pragmatic here: the service keeps data current in OneLake without you orchestrating extracts and loads. You still model, cleanse, and conform—but against a continuously fresh mirror rather than fragile one-off copies.

Crucially, Mirroring is not virtualization. Shortcuts point to external data; Mirroring brings it into OneLake with change propagation, enabling Direct Lake BI, SQL endpoints, notebooks, and governed sharing downstream.

Why pipeline toil collapses

1) CDC and copy orchestration disappear. The service tracks changes at the source and reflects them in OneLake—no nightly jobs to schedule, retry, or de-duplicate. Teams retire swaths of DAGs whose only purpose was “keep a faithful copy over here.”

2) Fewer failure modes, fewer moving parts. No agent farms or custom connectors. Less code and infra mean fewer credential expiries, schema-drift breakages, and transient retries to babysit. Fabric’s mirrored databases show up as first-class items with SQL analytics endpoints and shareable permissions.

3) One physical copy, many consumers. Because the mirror lands as Delta/Parquet in OneLake, every workload (BI, data science, ELT) hits the same persisted copy—often in Direct Lake, eliminating scheduled “dataset refreshes.” The effect is organizational: consolidation of sources and reduction of parallel one-off feeds.

4) Multi-cloud consolidation without re-engineering. For estates running Snowflake, Mirroring adds those databases to OneLake with continuous sync—so Power BI/Fabric workloads can standardize on a single lake without uprooting what already works. It’s a pragmatic bridge rather than a forced migration.

5) Broad source reach via Open Mirroring. Beyond Azure SQL and Snowflake, the Open Mirroring ecosystem extends to SaaS and enterprise apps (e.g., Salesforce, SAP, NetSuite) through partners—again, without building and caring for bespoke connectors.

Collectively, these shifts are why organizations see material reductions in pipeline maintenance: fewer DAGs, fewer secrets, fewer retries, fewer handoffs. The percentage will vary by estate, but the mechanisms for double-digit gains are real.

Patterns that unlock the value

Pattern 1 — “Mirror as Bronze.” Treat the mirrored database as the authoritative Bronze. It’s already raw-faithful and continuously fresh; downstream curation (business keys, conforming dimensions, data quality) happens in Warehouse/Lakehouse SQL over Delta. The pipeline layer shrinks to modeling, not copy logistics.

Pattern 2 — Real-time BI without refresh. Mirrored Delta in OneLake + Direct Lake semantic models means dashboards track the source with minimal latency—no nightly import/refresh windows and fewer staleness incidents during business hours. (And you keep a SQL endpoint for ad-hoc analysis.)

Pattern 3 — Multi-cloud “No-regrets” bridge. Keep Snowflake as the system of record for parts of the estate while mirroring into OneLake to standardize governance, lineage, and BI. This reduces cross-platform friction and avoids duplicate ingestion frameworks while you rationalize long-term architecture.

Pattern 4 — Operational analytics without OLTP tax. Mirror Azure SQL (and increasingly, SQL Server behind firewalls) to OneLake for near real-time operational reporting. The OLTP stays focused on transactions; analytics ride the mirror.

Pattern 5 — Open Mirroring for “SaaS sprawl.” Use partner-backed Open Mirroring to reflect CRM/ERP changes into OneLake, then apply uniform governance and modeling once—rather than babysitting a zoo of app-specific connectors and brittle incremental jobs.

Where “zero-ETL” stops

Mirroring kills copy-jobs toil; it doesn’t eliminate data work. Expect to still:

- Define data contracts and business keys across mirrored tables.

- Handle deletions, late-arriving facts, and Type-2 history where the business needs it.

- Conform reference data and standardize semantics across sources.

- Curate PII and apply policy-driven masking/row-level controls in downstream layers.

In other words, the engineering effort moves up the stack—from moving bytes to modeling meaning.

Mirror vs. Traditional Ingest

| Use Mirroring when… | Prefer bespoke ingest when… |

|---|---|

| The goal is continuous reflection of a database/warehouse into OneLake for broad consumption. | The source isn’t supported (yet), or you need a highly specialized extract/transform contract. |

| Low-ops matters: minimize schedules, retries, credentials, and connector sprawl. | You require heavy pre-landing transformations or complex, multi-hop pipelines. |

| You want Direct Lake BI and unified governance over a single physical copy. | You must land in a non-Delta format or a different storage target for regulatory/legacy reasons. |

Risks, realities, and governance

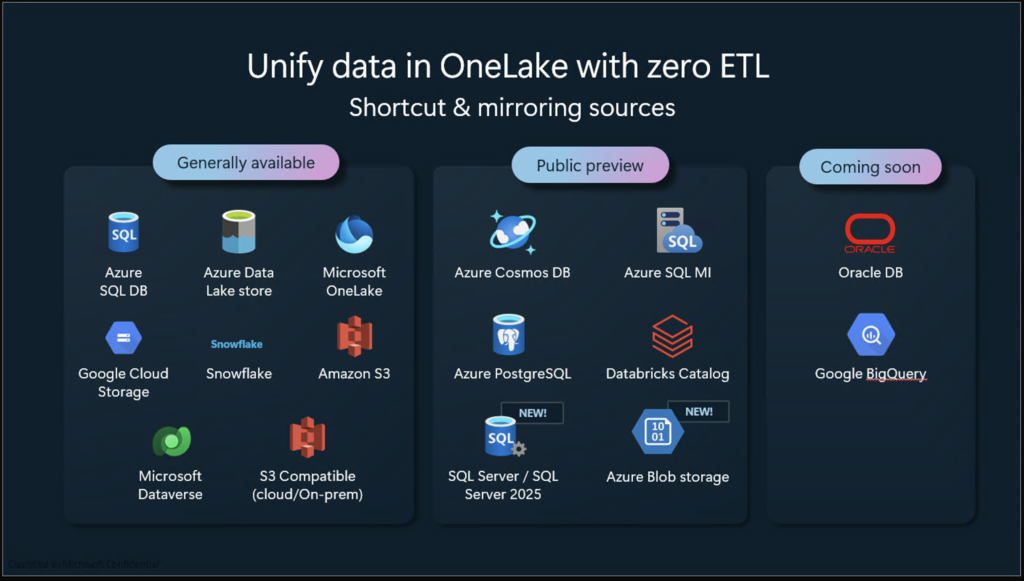

- Source coverage and constraints. Supported sources continue to expand (Azure SQL, Snowflake GA; SQL Server scenarios emerging; Open Mirroring partners for enterprise/SaaS). Align expectations with what’s officially supported for your latency, DDL drift, and throughput needs.

- Schema change semantics. Mirroring tracks and applies changes, but downstream models must be resilient to evolving shapes. Treat the mirror as a living contract, not a frozen extract.

- Security & sharing. Mirrored databases are first-class items with shareable permissions and a SQL endpoint; centralize access there and keep source systems insulated.

- Cost optics. You’ll trade batch copy costs for continuous replication and lake storage. The gain is predictability and a simpler estate; verify with pilot telemetry. (The “bill of materials” is easier to reason about when the platform does the moving.)

The net-net: Mirroring turns “data movement” from a garden of scripts into a platform service. That one architectural decision—reflect, don’t re-build—erases a surprising amount of operational drag. The work that remains is the work that actually differentiates the business: modeling, quality, and semantics.

If “zero-ETL” sounds like marketing, read it as “zero pipeline babysitting.” That’s the part teams are happiest to lose—and where the 60% reduction in toil comes from in practice.

Leave a Reply