Whether it’s fraud detection in banking, predictive maintenance in manufacturing, or personalized recommendations in e-commerce, real-time data ingestion plays a critical role in making instant, data-driven decisions.

This guide explores what real-time data ingestion is, why it matters, when to use it, the different approaches available, and how Microsoft Fabric enables real-time data processing at scale.

What is Real-Time Data Ingestion?

Real-time data ingestion refers to the continuous process of capturing, processing, and storing data as it is generated, rather than in scheduled batches. Unlike traditional batch processing, where data is collected and processed at fixed intervals, real-time ingestion ensures data is immediately available for analytics, monitoring, and automation.

Key Characteristics of Real-Time Data Ingestion:

✅ Low Latency: Data is processed in milliseconds or seconds.

✅ Streaming Data Sources: IoT devices, sensors, social media feeds, application logs, financial transactions, etc.

✅ Continuous Data Flow: Instead of waiting for batch processing, data flows in a steady stream to analytics systems.
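To make the batch-versus-streaming contrast concrete, here is a minimal Python sketch. The `sensor_feed` generator is a hypothetical stand-in for a live source. The batch function produces nothing until every event has been collected, while the streaming function emits an updated result after each event.

```python
import time
from typing import Iterable, Iterator


def batch_total(events: list[float]) -> float:
    # Batch style: no result is available until the whole batch has been collected.
    return sum(events)


def streaming_totals(events: Iterable[float]) -> Iterator[float]:
    # Streaming style: emit an up-to-date result after every single event.
    total = 0.0
    for value in events:
        total += value
        yield total


def sensor_feed() -> Iterator[float]:
    # Hypothetical stand-in for a live source such as an IoT sensor.
    for reading in [1.5, 2.0, 0.5]:
        time.sleep(0.01)  # simulate events arriving over time
        yield reading


for running in streaming_totals(sensor_feed()):
    print(f"running total so far: {running}")  # 1.5, then 3.5, then 4.0

print("batch total:", batch_total(list(sensor_feed())))  # 4.0, only at the end
```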

Example Use Cases:

- Fraud detection in banking (flagging suspicious transactions instantly).

- Real-time monitoring of IoT sensors in smart factories.

- Personalized content recommendations based on live user interactions.

Why Real-Time Data?

Because businesses operate in highly dynamic environments, real-time data is becoming a key differentiator, enabling faster decision-making and automation.

1. Faster Decision-Making

Traditional batch processing delays insights by minutes or hours, whereas real-time data enables organizations to react instantly to emerging trends and anomalies.

Example: Financial markets rely on real-time analytics to detect and respond to stock price fluctuations within seconds.

2. Enhanced Customer Experiences

Companies can deliver hyper-personalized experiences by analyzing user behavior in real time.

Example: Streaming platforms like Netflix and Spotify use real-time data to recommend content based on live viewing and listening patterns.

3. Operational Efficiency

Industries such as manufacturing and logistics benefit from real-time monitoring and predictive analytics to reduce downtime and optimize supply chains.

Example: Smart factories use IoT sensors to track machine performance and predict failures before they occur.

4. Improved Security and Compliance

Real-time monitoring helps detect cyber threats, fraud, and policy violations before they escalate.

Example: Banks use real-time fraud detection systems to block fraudulent transactions as they happen.

When to Consider Real-Time Data?

While real-time data ingestion offers many advantages, it is not necessary for every use case. Here are the key scenarios where real-time data is essential:

1. Time-Sensitive Business Processes

- Stock trading, fraud detection, real-time bidding in ad tech.

2. IoT and Sensor-Based Applications

- Smart cities, connected vehicles, predictive maintenance in industrial machines.

3. Live Customer Interactions

- Chatbots, recommendation engines, real-time customer support analytics.

4. Continuous System Monitoring and Anomaly Detection

- Cybersecurity monitoring, infrastructure health checks, compliance tracking.

When NOT to Use Real-Time Data?

- If latency is not critical (e.g., monthly sales reports, historical trend analysis).

- If infrastructure costs outweigh the benefits (real-time processing can be expensive).

- If data is inherently static (e.g., census data, archival records).

Approaches for Real-Time Data Ingestion

There are multiple approaches to ingesting real-time data, depending on the source, latency requirements, and processing needs.

1. Streaming Data Pipelines

Definition: Data flows continuously from the source to a processing engine and then to storage and analytics systems. Technologies: Apache Kafka, Azure Event Hubs, Google Cloud Pub/Sub, Amazon Kinesis.

✅ Best for: Log processing, IoT sensor data, event-driven applications.
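The source → processing → storage flow of a streaming pipeline can be sketched in plain Python. This is an illustration under stated assumptions, not a production design: an in-memory `queue.Queue` stands in for a broker such as Kafka or Event Hubs, a Python list stands in for the storage/analytics sink, and the sensor readings are made up.

```python
import queue
import threading

# An in-memory queue stands in for a broker such as Kafka or Azure Event Hubs.
broker = queue.Queue()
sink = []  # stands in for the storage/analytics destination

SENTINEL = None  # marks the end of this demo stream (a real stream never ends)


def producer(readings):
    # Source side: publish each event as soon as it is generated.
    for device_id, temp_c in readings:
        broker.put({"device": device_id, "temp_c": temp_c})
    broker.put(SENTINEL)


def consumer():
    # Processing side: transform each event as it arrives and write it out.
    while True:
        event = broker.get()
        if event is SENTINEL:
            break
        event["temp_f"] = event["temp_c"] * 9 / 5 + 32
        sink.append(event)


readings = [("sensor-1", 20.0), ("sensor-2", 25.0), ("sensor-1", 30.0)]
worker = threading.Thread(target=consumer)
worker.start()
producer(readings)
worker.join()
print(sink)
```

Decoupling producer and consumer through the queue is the key design choice: the source never waits for processing to finish, which is what a broker provides at much larger scale.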

2. Change Data Capture (CDC)

Definition: Captures row-level changes in a database (INSERT, UPDATE, DELETE) and streams them to downstream systems. Technologies: Debezium, Azure Cosmos DB change feed, AWS DMS.

✅ Best for: Replicating databases, syncing data across platforms, real-time reporting.
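To show what a downstream consumer does with CDC output, here is a minimal sketch that keeps an in-memory replica in sync. The events are simplified and only loosely modelled on Debezium's envelope (an `op` code plus `before`/`after` row images). Real events carry more metadata, and the `id` primary key and `status` column are assumptions for the example.

```python
# Simplified change events modelled loosely on Debezium's envelope:
# "op" is c (create), u (update), d (delete) or r (snapshot read),
# with the row image in "before" and/or "after".
change_events = [
    {"op": "c", "before": None, "after": {"id": 1, "status": "pending"}},
    {"op": "c", "before": None, "after": {"id": 2, "status": "pending"}},
    {"op": "u", "before": {"id": 1, "status": "pending"}, "after": {"id": 1, "status": "shipped"}},
    {"op": "d", "before": {"id": 2, "status": "pending"}, "after": None},
]


def apply_change(replica: dict[int, dict], event: dict) -> None:
    """Apply one change event to an in-memory replica keyed by primary key."""
    op = event["op"]
    if op in ("c", "r", "u"):
        row = event["after"]
        replica[row["id"]] = row  # upsert, so replaying an event is harmless
    elif op == "d":
        replica.pop(event["before"]["id"], None)
    else:
        raise ValueError(f"unknown operation: {op!r}")


replica: dict[int, dict] = {}
for event in change_events:
    apply_change(replica, event)
print(replica)  # {1: {'id': 1, 'status': 'shipped'}}
```

Treating creates and updates as upserts keeps the replica correct even if the same event is delivered twice, which is a common guarantee in at-least-once streaming systems.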

3. Real-Time ETL (Extract, Transform, Load)

Definition: Instead of traditional batch ETL, real-time ETL transforms data while it is in motion. Technologies: Apache Flink, Spark Structured Streaming, AWS Glue Streaming, Azure Stream Analytics.

✅ Best for: Processing and transforming large-scale streaming data in real time.
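A core real-time ETL operation is windowed aggregation. Below is a minimal pure-Python sketch of a tumbling (fixed, non-overlapping) window average, similar in spirit to what Flink or Spark Structured Streaming provide. It assumes events arrive in timestamp order and carry `ts`, `device`, and `value` fields (all hypothetical names). Late events are simply dropped here, whereas real engines use watermarks to handle them.

```python
from collections import defaultdict
from typing import Iterable, Iterator


def tumbling_window_avg(events: Iterable[dict], window_seconds: int = 60) -> Iterator[tuple]:
    """Yield (window_start, device, average) as each tumbling window closes."""
    current = None  # start of the window currently being filled
    sums = defaultdict(float)
    counts = defaultdict(int)

    def flush():
        # Emit one result per device for the current window, then reset state.
        for device in sorted(sums):
            yield (current, device, sums[device] / counts[device])
        sums.clear()
        counts.clear()

    for event in events:
        if event.get("value") is None:  # transform step: drop malformed readings
            continue
        start = event["ts"] - event["ts"] % window_seconds
        if current is not None and start < current:
            continue  # late event for a window that was already emitted
        if current is not None and start > current:
            yield from flush()  # a newer window has begun: close the old one
        current = start
        sums[event["device"]] += event["value"]
        counts[event["device"]] += 1
    if current is not None:
        yield from flush()  # end of input: emit the last open window


sample = [
    {"ts": 0, "device": "a", "value": 10.0},
    {"ts": 30, "device": "a", "value": 20.0},
    {"ts": 45, "device": "b", "value": None},  # malformed: dropped
    {"ts": 61, "device": "a", "value": 5.0},
    {"ts": 10, "device": "a", "value": 99.0},  # late: window 0 already emitted
    {"ts": 70, "device": "b", "value": 7.0},
]
print(list(tumbling_window_avg(sample)))
# [(0, 'a', 15.0), (60, 'a', 5.0), (60, 'b', 7.0)]
```

Because results are emitted as soon as a window closes, downstream systems see each aggregate about one window-length after the data arrives, rather than after a scheduled batch run.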

4. Webhooks & API-Based Ingestion

Definition: Uses HTTP callbacks (webhooks) and APIs to ingest event-driven data in near real time. Technologies: REST APIs, WebSockets, Server-Sent Events (SSE).

✅ Best for: Live user events, transactional systems, chat applications.
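Webhook ingestion usually starts with verifying that a delivery really came from the sender. The sketch below shows a common pattern, HMAC-SHA256 over the raw request body compared in constant time, using only the Python standard library. The shared secret, the event payload, and the idea that the signature arrives as a hex string are assumptions; each webhook provider documents its own header names and signing scheme, and the function is deliberately framework-agnostic.

```python
import hashlib
import hmac
import json

# Hypothetical shared secret agreed with the sender.
WEBHOOK_SECRET = b"replace-with-shared-secret"


def sign(body: bytes, secret: bytes = WEBHOOK_SECRET) -> str:
    """Hex HMAC-SHA256 of the raw request body (what the sender would compute)."""
    return hmac.new(secret, body, hashlib.sha256).hexdigest()


def handle_webhook(body: bytes, signature: str, secret: bytes = WEBHOOK_SECRET) -> dict:
    """Verify and parse one webhook delivery before it enters the pipeline."""
    expected = sign(body, secret)
    # compare_digest avoids leaking information through timing differences.
    if not hmac.compare_digest(expected, signature):
        raise PermissionError("signature mismatch: rejecting event")
    return json.loads(body)


body = b'{"type": "order.created", "order_id": 42}'
event = handle_webhook(body, sign(body))
print(event["type"])  # order.created
```

Verifying against the raw bytes, before JSON parsing, matters: re-serializing parsed JSON can change whitespace or key order and break the signature check.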

Each approach serves different use cases, and selecting the right method depends on latency needs, data volume, and processing complexity.

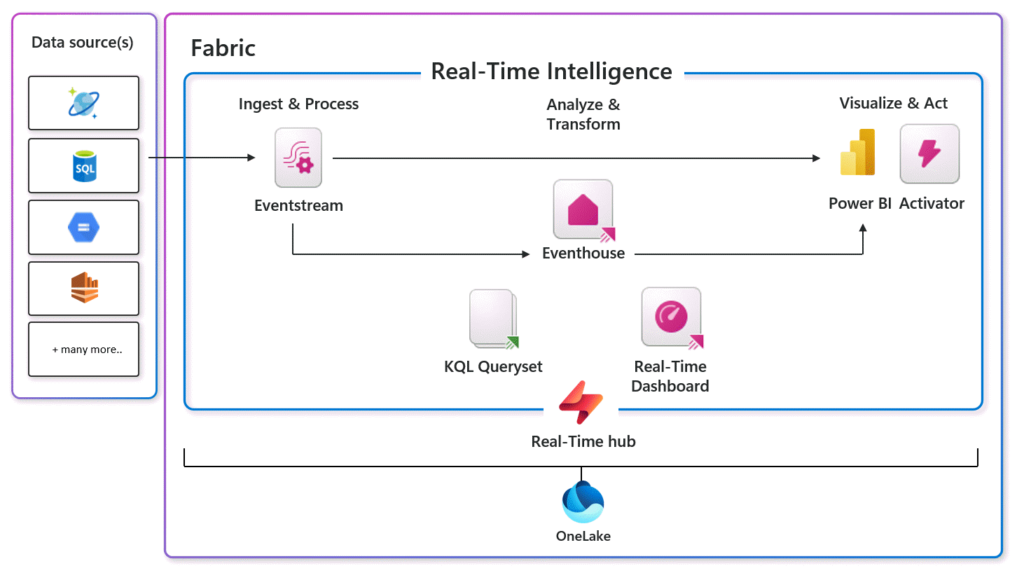

Real-Time Data Ingestion with Microsoft Fabric

Microsoft Fabric is an end-to-end, software-as-a-service (SaaS) analytics platform that brings real-time data ingestion and analytics together with data engineering, warehousing, and reporting.

Why Choose Microsoft Fabric for Real-Time Data Ingestion?

| Feature | Benefit |
|---|---|
| Unified Data Fabric | Integrates real-time, batch, and historical data into a single architecture. |
| OneLake Storage | Eliminates redundant copies and optimizes real-time data access. |
| Eventstream & Kusto DB | Enables fast, scalable event streaming and real-time querying. |
| Fabric Pipelines | Automates data ingestion, transformation, and orchestration. |
| Microsoft Purview | Provides built-in governance, security, and compliance for real-time data. |
| Power BI & SynapseML | Delivers AI-powered insights from real-time data streams. |

By leveraging Microsoft Fabric, businesses can ingest, process, govern, and analyze real-time data efficiently, making it easier to drive AI-powered decision-making, optimize operations, and enhance customer experiences.

1. Event Streaming with Kusto DB and Eventstream

For real-time analytics to be effective, businesses need a streaming-first architecture that can handle high-velocity, time-sensitive data. Microsoft Fabric provides this through two components that work together: Eventstream and Kusto DB.

Eventstream – The Foundation for Streaming Data

- Acts as a real-time data pipeline, ingesting streaming data from multiple sources, such as IoT devices, application logs, financial transactions, and social media feeds.

- Supports event-driven architectures, ensuring that insights are generated as soon as new data arrives.

- Can route streaming data to different Fabric services like Kusto DB, OneLake, and Power BI for analysis.

- Key Benefit: Reduces complexity by handling real-time ingestion and routing inside Fabric, so teams often do not need to run and manage a separate streaming platform such as Kafka.
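The routing idea behind Eventstream (one incoming stream fanned out to several destinations) can be illustrated conceptually in Python. In Fabric, this routing is configured in the Eventstream itself rather than written as code; the destination names and rules below are hypothetical and only show the fan-out logic.

```python
from collections import defaultdict

# Hypothetical routing rules: each destination has a predicate that decides
# whether an event should be delivered to it.
ROUTES = {
    "kusto_db": lambda e: True,                        # keep everything for querying
    "onelake": lambda e: e["type"] == "transaction",   # archive transactions
    "alerts": lambda e: e.get("amount", 0) > 10_000,   # large amounts only
}


def route(events, routes=ROUTES):
    """Fan each incoming event out to every destination whose rule matches."""
    outputs = defaultdict(list)
    for event in events:
        for destination, matches in routes.items():
            if matches(event):
                outputs[destination].append(event)
    return dict(outputs)


sample = [
    {"type": "transaction", "amount": 50},
    {"type": "log", "message": "service started"},
    {"type": "transaction", "amount": 20_000},
]
print({dest: len(evts) for dest, evts in route(sample).items()})
# {'kusto_db': 3, 'onelake': 2, 'alerts': 1}
```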

Kusto DB (KQL Database) – Purpose-Built for High-Throughput, Real-Time Querying

- Optimized for large-scale, low-latency analytics, making it ideal for log analytics, telemetry, security monitoring, and anomaly detection.

- Uses Kusto Query Language (KQL), which is designed for fast filtering, aggregation, and time-series analysis over large volumes of streaming and log data.

- Key Benefit: Enables businesses to track and analyze time-sensitive data in real time, reducing the time-to-insight from hours to seconds.
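As an illustration of the kind of query a KQL database serves, the sketch below pairs a short KQL query (stored as a string, to be run in a KQL queryset or similar tool, not executed here) with a pure-Python equivalent that shows what it computes: the average temperature per device per one-minute bin over the last five minutes. The `Telemetry` table and its `Timestamp`, `DeviceId`, and `Temperature` columns are hypothetical.

```python
from collections import defaultdict
from datetime import datetime, timedelta, timezone

# A KQL query of the kind described above; table and column names are hypothetical.
KQL_QUERY = """
Telemetry
| where Timestamp > ago(5m)
| summarize AvgTemp = avg(Temperature) by DeviceId, bin(Timestamp, 1m)
"""


def summarize_avg_by_minute(rows, now, lookback=timedelta(minutes=5)):
    """Pure-Python equivalent of KQL_QUERY, for illustration only."""
    totals = defaultdict(lambda: [0.0, 0])  # (device, minute) -> [sum, count]
    for row in rows:
        ts = row["Timestamp"]
        if ts <= now - lookback:  # where Timestamp > ago(5m)
            continue
        minute = ts.replace(second=0, microsecond=0)  # bin(Timestamp, 1m)
        acc = totals[(row["DeviceId"], minute)]
        acc[0] += row["Temperature"]
        acc[1] += 1
    return {key: s / n for key, (s, n) in totals.items()}


now = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
rows = [
    {"Timestamp": now - timedelta(seconds=30), "DeviceId": "d1", "Temperature": 20.0},
    {"Timestamp": now - timedelta(seconds=50), "DeviceId": "d1", "Temperature": 22.0},
    {"Timestamp": now - timedelta(minutes=10), "DeviceId": "d1", "Temperature": 99.0},
]
print(summarize_avg_by_minute(rows, now))
```

The point of the comparison is that KQL expresses the filter, time bucketing, and aggregation in three pipeline steps, and the engine runs them over far larger data volumes than an in-memory loop could.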

✅ Use Cases:

- IT Operations Monitoring: Detecting anomalies and system failures instantly.

- Cybersecurity Analytics: Identifying potential threats and intrusions in real time.

- IoT Device Data Processing: Monitoring connected devices and predicting maintenance needs.

2. OneLake – Unified Storage for Real-Time Data

Unlike traditional architectures that require separate data lakes and warehouses, Microsoft Fabric introduces OneLake—a single, unified data lake for all organizational data.

How OneLake Enhances Real-Time Ingestion:

- Stores real-time data once, so Fabric engines can access the same streaming records without creating redundant copies.

- Supports both structured and unstructured data, making it easy to store JSON, Parquet, CSV, and more.

- Seamlessly integrates with other Fabric components, such as Eventstream, Kusto DB, and Data Factory, to create an end-to-end real-time analytics workflow.

- Key Benefit: Reduces the need for complex ETL pipelines by offering direct access to streaming data through a single, governed data lake.
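A common way to land semi-structured streaming records in a data lake is as JSON Lines files in date-partitioned folders. The sketch below illustrates that layout using a local temporary directory as a stand-in for a lakehouse files area. It does not use any OneLake API, and the `date=YYYY-MM-DD` folder convention is a widespread practice rather than a OneLake requirement. The `ts` (epoch seconds) field is an assumption.

```python
import json
import tempfile
from collections import defaultdict
from datetime import datetime, timezone
from pathlib import Path


def land_events(events, root: Path) -> list[Path]:
    """Append events as JSON Lines, partitioned by event date (UTC)."""
    by_date = defaultdict(list)
    for event in events:
        day = datetime.fromtimestamp(event["ts"], tz=timezone.utc).date().isoformat()
        by_date[day].append(event)
    written = []
    for day, batch in sorted(by_date.items()):
        folder = root / f"date={day}"  # Hive-style partition folder
        folder.mkdir(parents=True, exist_ok=True)
        path = folder / "events.jsonl"
        with path.open("a", encoding="utf-8") as f:  # append: new events keep arriving
            for event in batch:
                f.write(json.dumps(event) + "\n")
        written.append(path)
    return written


sample = [{"ts": 0, "v": 1}, {"ts": 86_400, "v": 2}, {"ts": 10, "v": 3}]
with tempfile.TemporaryDirectory() as tmp:
    for path in land_events(sample, Path(tmp)):
        print(path.relative_to(tmp))
```

Partitioning by date lets downstream queries skip folders outside the time range they need, which keeps reads over a growing stream fast.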

✅ Use Cases:

- Real-Time Financial Transactions: Capturing and analyzing bank transactions for fraud detection.

- E-Commerce Clickstream Analysis: Tracking user behavior across digital platforms to personalize experiences.

- Smart Grid Data Processing: Managing energy consumption data from smart meters in real time.

3. Fabric Pipelines – Automating Data Processing in Real-Time

Real-time data ingestion isn’t just about capturing raw data—it requires real-time transformation, enrichment, and processing to extract valuable insights.

Fabric Pipelines offer:

- Low-Code and No-Code Data Workflows: Business users can create and manage real-time ingestion flows without deep technical expertise.

- Automated Data Cleansing & Enrichment: Data is normalized, validated, and prepared for analysis as it streams in.

- Event-Driven Processing: Data pipelines trigger real-time transformations based on incoming data patterns.

- Key Benefit: Enables businesses to automate real-time ETL processes and reduce manual intervention.
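The cleansing and enrichment steps described above can be sketched as a per-record function. This is a hypothetical example, not Fabric Pipelines code: the `sku`, `store_id`, and `quantity` fields and the `STORE_REGIONS` lookup table are made up for illustration. Records that fail validation return `None`. In practice, they would usually be sent to a dead-letter location for review rather than silently discarded.

```python
from datetime import datetime, timezone
from typing import Optional

# Hypothetical reference data used for enrichment (e.g. a store catalog).
STORE_REGIONS = {"S1": "North", "S2": "South"}


def cleanse_and_enrich(record: dict) -> Optional[dict]:
    """Normalize, validate and enrich one sales record; return None to reject it."""
    try:
        qty = int(record["quantity"])                # normalize type
        sku = str(record["sku"]).strip().upper()     # normalize formatting
        store = str(record["store_id"]).strip().upper()
    except (KeyError, TypeError, ValueError):
        return None  # missing or unparseable fields
    if qty <= 0 or not sku:
        return None  # fails business validation
    return {
        "sku": sku,
        "store_id": store,
        "quantity": qty,
        "region": STORE_REGIONS.get(store, "Unknown"),  # enrichment lookup
        "processed_at": datetime.now(timezone.utc).isoformat(),
    }


stream = [
    {"sku": " ab-1 ", "store_id": "s1", "quantity": "3"},
    {"sku": "cd-2", "store_id": "S9", "quantity": "abc"},  # rejected: bad quantity
]
clean = [r for r in map(cleanse_and_enrich, stream) if r is not None]
print(clean)
```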

✅ Use Cases:

- Retail Inventory Management: Auto-updating stock levels based on real-time sales data.

- Healthcare Monitoring: Streaming patient vitals and identifying anomalies for instant alerts.

- Social Media Sentiment Analysis: Analyzing trending topics in real time as they emerge.

4. Governance & Security with Microsoft Purview

One of the biggest challenges with real-time data ingestion is ensuring security, compliance, and governance, especially when handling sensitive financial, healthcare, or personal data.

Microsoft Purview integrates directly with Fabric to provide:

- End-to-End Data Lineage: Tracks where real-time data comes from, how it’s transformed, and where it’s used.

- Role-Based Access Control (RBAC): Prevents unauthorized access to sensitive real-time data streams.

- Automated Compliance Monitoring: Helps ensure real-time data processing aligns with GDPR, HIPAA, and other regulatory requirements.

- Key Benefit: Governs real-time data at scale, reducing risks while maintaining transparency and security.
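To illustrate the role-based access idea, here is a minimal sketch that masks fields in a streaming event according to the reader's role. It only demonstrates the concept. In Fabric and Purview, access controls are configured through the platform's permission and governance settings, not in application code. The roles, field names, and `***` mask are hypothetical.

```python
# Hypothetical roles and the fields each one is allowed to see.
VISIBLE_FIELDS = {
    "fraud_analyst": {"txn_id", "amount", "account_id", "merchant"},
    "bi_viewer": {"txn_id", "amount", "merchant"},
}


def apply_access_policy(event: dict, role: str) -> dict:
    """Return a copy of the event with fields outside the role's policy masked."""
    allowed = VISIBLE_FIELDS.get(role)
    if allowed is None:
        raise PermissionError(f"role {role!r} may not read this stream")
    return {k: (v if k in allowed else "***") for k, v in event.items()}


txn = {"txn_id": "T-1", "amount": 250.0, "account_id": "ACC-7", "merchant": "ACME"}
print(apply_access_policy(txn, "bi_viewer"))
# {'txn_id': 'T-1', 'amount': 250.0, 'account_id': '***', 'merchant': 'ACME'}
```

Denying unknown roles outright (rather than returning a fully masked event) follows the deny-by-default principle usually recommended for sensitive data.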

✅ Use Cases:

- Financial Services: Enforcing compliance on real-time banking transactions.

- Healthcare & Life Sciences: Securing streaming patient data for AI-driven diagnostics.

- Manufacturing: Ensuring sensitive IoT sensor data remains encrypted and protected.

5. AI-Powered Insights with Power BI & Machine Learning

Once real-time data is ingested, transformed, and secured, organizations need a way to analyze and act on it quickly.

Power BI for Real-Time Dashboards & Visualization

- Connects directly to real-time data sources, enabling instant visualization of live data.

- Supports anomaly detection and trend analysis, allowing businesses to make proactive decisions.

- Key Benefit: Empowers business users with AI-enhanced, real-time insights without requiring deep technical expertise.

AI & Machine Learning in Microsoft Fabric

- Enables real-time AI-driven decision-making with SynapseML and AutoML integration.

- Detects anomalies, predicts trends, and automates responses to streaming events.

- Key Benefit: Transforms real-time data into predictive intelligence, enabling businesses to move beyond monitoring to proactive intervention.
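As a simple illustration of anomaly detection on a stream, the sketch below flags readings that fall far outside a rolling mean, using a z-score over the last N values. This is a basic statistical baseline chosen for clarity. It is not the anomaly detection built into Power BI or Fabric, and the window size and threshold are illustrative defaults.

```python
import math
from collections import deque


class RollingZScoreDetector:
    """Flag a reading whose distance from the rolling mean exceeds `threshold` std devs."""

    def __init__(self, window: int = 20, threshold: float = 3.0):
        self.values = deque(maxlen=window)  # only the most recent readings are kept
        self.threshold = threshold

    def update(self, x: float) -> bool:
        is_anomaly = False
        if len(self.values) >= 2:
            n = len(self.values)
            mean = sum(self.values) / n
            std = math.sqrt(sum((v - mean) ** 2 for v in self.values) / (n - 1))
            if std > 0 and abs(x - mean) / std > self.threshold:
                is_anomaly = True
        self.values.append(x)
        return is_anomaly


detector = RollingZScoreDetector(window=5, threshold=3.0)
readings = [10, 11, 10, 11, 10, 50]
print([detector.update(r) for r in readings])
# [False, False, False, False, False, True]
```

One design choice worth noting: this version adds anomalous readings to the window, so a sustained shift eventually becomes the new baseline. Excluding flagged values keeps the baseline stable but can make the detector slow to adapt to genuine changes.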

✅ Use Cases:

- Predictive Maintenance in Manufacturing: Using AI to forecast machine failures based on real-time sensor data.

- Fraud Detection in Banking: Identifying fraudulent transactions within milliseconds using AI models.

- Customer Retargeting in E-Commerce: Delivering personalized promotions in real time based on user behavior.

Unlocking the Power of Real-Time Data

Real-time data ingestion is redefining industries by enabling faster insights, better customer experiences, and proactive decision-making. However, adopting real-time data requires careful consideration of infrastructure, latency needs, and governance frameworks.

With Microsoft Fabric, businesses can simplify real-time data ingestion, integrate streaming sources seamlessly, and apply AI-driven analytics to gain actionable insights in seconds, not hours.

Next Steps:

- Evaluate your organization’s need for real-time data ingestion.

- Explore Microsoft Fabric’s real-time data capabilities.

- Implement a proof of concept (POC) to test real-time processing in action.

By leveraging real-time data ingestion, businesses can stay ahead of the competition, enhance operational efficiency, and future-proof their analytics infrastructure.
