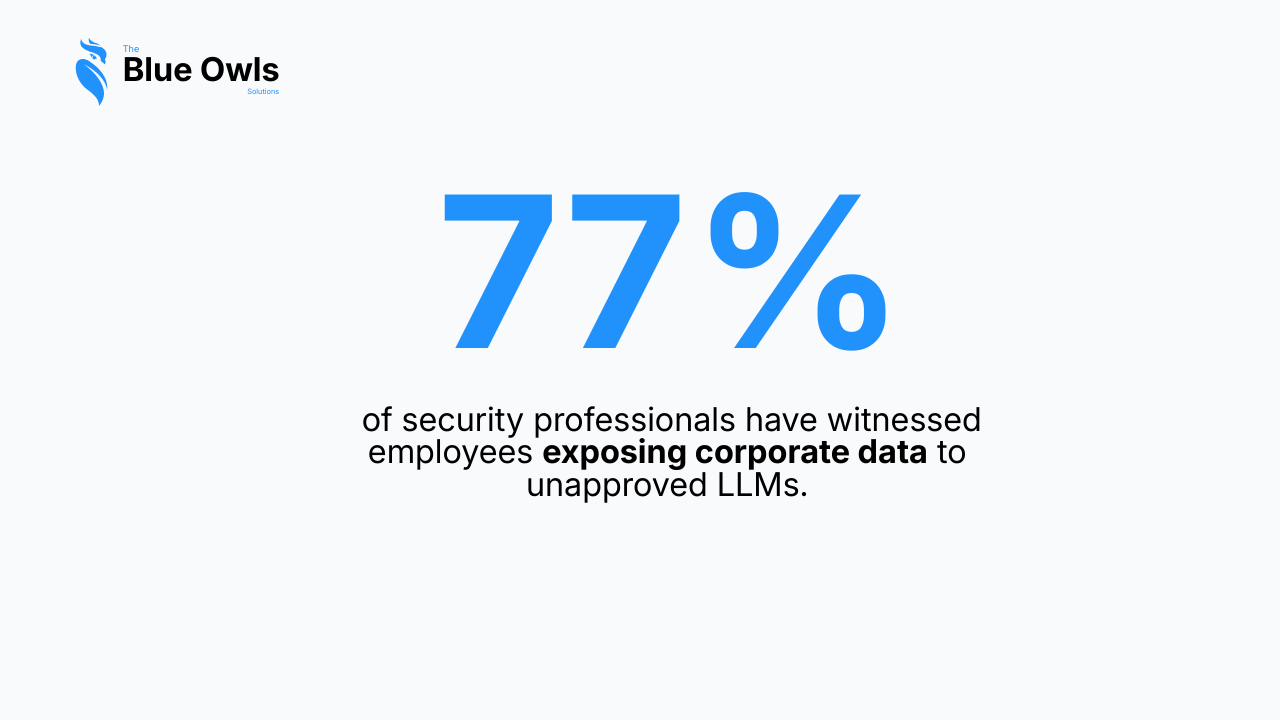

A recent report from Computer Weekly revealed a staggering statistic: 77% of security professionals have witnessed employees exposing corporate data to unapproved Large Language Models (LLMs). For those of us in the trenches of digital transformation, this is more than a security metric; it is a clear signal. It confirms that Shadow AI has arrived, and that it is a far more volatile cousin of the Shadow IT we have spent the last decade managing.

The Shift from Shadow IT to Shadow AI

In the era of Shadow IT, the risk was primarily about software: employees adopted unapproved project management tools or cloud storage services, and the consequences were fragmented data and unmanaged spend.

Shadow AI is different. It is not just about the tool; it is about the intelligence cycle. When an employee pastes a proprietary “Product Requirements Document” into a public LLM to summarize it, they are not just using unapproved software. They are actively sending corporate IP to an external service that may retain it or use it to train future models, and whose handling of that data the organization cannot audit, control, or retract.

The Productivity Paradox

It is a mistake to view Shadow AI as a “rogue employee” problem. Most employees aren’t acting with malice; they are acting with ambition.

They are reaching for these tools because they have a job to do, and the tools provided by the organization aren’t keeping up. When we simply “ban” AI, we create a productivity vacuum. Employees will always find a way to work faster, even if it means going “off-grid.”

The Strategic Lever: Information Architecture

The companies winning the AI race in 2026 aren’t the ones with the strictest “No AI” policies. They are the ones making the governed path the fast path.

The secret isn’t just a better chatbot; it’s a better Information Architecture (IA). Generic, off-the-shelf AI tools lack three things essential to your business:

- Your Data: Your specific customer history and proprietary research.

- Your Processes: How your teams actually move projects from A to B.

- Your Taxonomy: The specific language, acronyms, and compliance rules of your industry.

An internal AI solution built on a governed data foundation does more than reduce risk: it can outperform consumer tools because it actually understands the context of your organization.

5 Pillars of a Productive AI Governance Strategy

To move from reactive “firefighting” to strategic AI adoption, organizations should focus on these five areas:

1. Radical Visibility

You cannot protect what you cannot see. Use CASB (Cloud Access Security Broker) tools and network monitoring to understand which models your employees are using. Don’t use this data to punish; use it to identify where the demand for AI is highest.
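As a rough illustration of using visibility data to find demand rather than to punish, the sketch below counts requests to public AI services per department from an exported proxy or CASB log. The CSV columns (`user`, `department`, `domain`), the `ai_demand_by_department` function, and the domain list are hypothetical assumptions; real CASB exports differ by vendor.

```python
import csv
import io
from collections import Counter

# Illustrative list of public AI service domains (an assumption; maintain your own).
AI_DOMAINS = {"chatgpt.com", "chat.openai.com", "gemini.google.com", "claude.ai"}


def ai_demand_by_department(log_csv: str) -> Counter:
    """Count requests to known AI domains per (department, domain) pair.

    Assumes a CSV export with hypothetical columns: user, department, domain.
    The user column is ignored, so the result shows where demand is
    concentrated without singling out individuals.
    """
    counts: Counter = Counter()
    for row in csv.DictReader(io.StringIO(log_csv)):
        domain = row["domain"].strip().lower()
        if domain in AI_DOMAINS:
            counts[(row["department"], domain)] += 1
    return counts


# Toy log standing in for a real proxy or CASB export.
sample_log = """user,department,domain
alice,Marketing,chatgpt.com
bob,Marketing,chatgpt.com
carol,Finance,claude.ai
dave,Finance,intranet.example.com
"""

for (dept, domain), n in ai_demand_by_department(sample_log).most_common():
    print(f"{dept:<10} {domain:<20} {n}")
```

Aggregating by department rather than by person keeps the exercise focused on where an internal alternative is most needed.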

2. The “Better Than Public” Alternative

If you want employees to stop using public LLMs, give them an internal version that is more useful. This means integrating AI directly into the tools they already work in every day, such as Microsoft Teams, Slack, or Power BI. If the internal tool is more convenient and has access to their files, they won’t feel the need to go elsewhere.

3. Data Hygiene as Security

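Shadow AI thrives on messy data: when internal content is disorganized or over-shared, an internal assistant is both less useful and riskier to deploy, which pushes employees back to public tools. By cleaning your data and applying strict permissioning (ensuring an AI can only “see” what the user has permission to see), you turn your information architecture into a protective shield.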
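To make the permissioning idea concrete, here is a minimal sketch of permission-aware retrieval, assuming each document carries a hypothetical `allowed_groups` set copied from its source system's access controls. The `Document` type and `retrieve_for_user` function are illustrative names, and simple keyword matching stands in for a real search or vector index; the point is only that the access filter runs before any text is handed to a model.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    doc_id: str
    text: str
    allowed_groups: frozenset[str]  # hypothetical ACL copied from the source system


def retrieve_for_user(query: str, user_groups: set[str], corpus: list[Document]) -> list[Document]:
    """Return matching documents, restricted to those the user may already open."""
    # 1. Security trimming first: drop anything none of the user's groups can read.
    visible = [d for d in corpus if d.allowed_groups & user_groups]
    # 2. Naive keyword match as a stand-in for a real search or vector index.
    terms = query.lower().split()
    return [d for d in visible if any(t in d.text.lower() for t in terms)]


corpus = [
    Document("prd-001", "Product requirements for the Q3 pricing engine", frozenset({"product", "engineering"})),
    Document("hr-042", "Salary bands and pricing of benefits", frozenset({"hr"})),
    Document("faq-007", "Public FAQ about pricing tiers", frozenset({"all-staff"})),
]

# An engineer asking about pricing never receives the HR-only document.
hits = retrieve_for_user("pricing", {"engineering", "all-staff"}, corpus)
print([d.doc_id for d in hits])  # ['prd-001', 'faq-007']
```

Filtering before retrieval, rather than asking the model to withhold restricted content, means the model never receives text the user could not open directly.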

4. Cross-Functional “Enablement” Teams

Move away from the “AI Council” that only says no. Create a cross-functional team (Security, Legal, IT, and Business Units) focused on enabling safe use cases. Their goal should be to vet and approve high-value AI workflows within 48 hours, not 48 days.

5. Automated Governance

Governance shouldn’t be a manual check-box at the end of a project. It should be built into the platform. This includes:

- Prompt Logging: Auditing what is being asked.

- PII Redaction: Automatically stripping personal data before it hits a model.

- Source Attribution: Ensuring the AI tells you exactly which internal document it got its answer from.
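These three controls can be combined in a thin gateway that sits between users and the model. The sketch below is illustrative only: the regex patterns are hypothetical and far from complete PII detection, the `governed_prompt` function and its audit record format are assumptions, and the model call itself is omitted. It redacts the prompt, writes only the redacted text to an audit log, and returns the IDs of the internal documents used so the answer can cite them.

```python
from __future__ import annotations

import json
import re
from datetime import datetime, timezone

# Illustrative patterns only (an assumption): regexes miss names, addresses and
# many ID formats, and the phone pattern will also catch some dates and numbers.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def redact(text: str) -> str:
    """Replace each PII match with a typed placeholder such as [EMAIL]."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


def governed_prompt(user: str, prompt: str, sources: list[str], audit_log: list[str]) -> dict:
    """Redact the prompt, append an audit record, and attach source attribution.

    `sources` are the IDs of internal documents retrieved for this prompt;
    sending the prompt to a model is left to the caller.
    """
    clean = redact(prompt)
    record = {
        "ts": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "prompt": clean,  # only the redacted text is ever stored
        "sources": sources,
    }
    audit_log.append(json.dumps(record))
    return {"prompt_for_model": clean, "sources": sources}


audit: list[str] = []
req = governed_prompt(
    "alice",
    "Summarize the ticket from jane.doe@example.com, callback +44 20 7946 0958",
    ["prd-001"],
    audit,
)
print(req["prompt_for_model"])  # Summarize the ticket from [EMAIL], callback [PHONE]
```

In practice the regex layer would be replaced by a dedicated PII detection service, but the shape stays the same: redact first, log the redacted form, and carry source IDs through to the answer.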

Conclusion: 2027 Belongs to the Governed

The gap between companies that “allow” AI and companies that “architect” AI is widening.

In 2026, the priority is solving the Shadow AI leak. But the long-term reward is much greater. By building a secure, internal AI ecosystem, you are creating a proprietary engine that speaks your company’s language and protects your most valuable asset: your collective intelligence.

The companies that solve this today will be the ones scaling with total confidence in 2027.